BindWeave AI

Subject-Consistent AI Video Generation

A unified MLLM-DiT video model designed for single- and multi-subject prompts, delivering precise entity grounding, cross-modal integration, and high-fidelity generation.

BindWeave Video Showcase from ByteDance

Explore sample videos produced by BindWeave, ByteDance's subject-consistent video generation model built on cross-modal integration and transformer-based motion modeling.

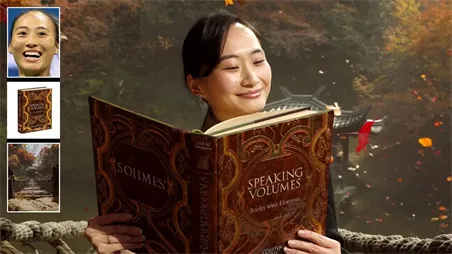

Single-Human-to-Video

From a single reference image, BindWeave generates lifelike motion sequences. It preserves identity across frames while naturally varying expressions, poses, and viewpoints, all guided by your creative prompt.

Multi-Human-to-Video

BindWeave enables realistic multi-character generation. Each subject keeps their distinct appearance and behavior, maintaining stable identities and natural interactions without visual drift or identity swaps.

Human-Entity-to-Video

BindWeave integrates people and objects in one coherent scene. It maintains per-subject and per-entity consistency, ensuring realistic physical interactions and smooth temporal coherence under occlusions or changing perspectives.

Key Features of BindWeave AI

Cross-Modal Intelligence for Subject-Consistent Video Generation

Cross-Modal Integration for Fidelity

BindWeave uses multimodal reasoning to fuse textual intent with visual references, so the diffusion process stays faithful to your subjects. This fusion is a cornerstone of the MLLM-DiT architecture.

Single or Multi-Subject Consistency

From one actor to multiple characters, BindWeave preserves identity, role, and spatial logic across scenes, keeping your visual narrative coherent from start to finish.

Entity Grounding & Role Disentanglement

BindWeave understands who's who, what they're doing, and how they relate — preventing attribute leakage and character confusion in complex prompts.

Prompt-Friendly Direction

Specify your creative vision with camera flow ("wide → mid → close-up"), wardrobe, or action cues. BindWeave interprets them as structured subject-aware guidance during generation.
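One way to keep cues like these organized before writing them into a prompt is sketched below. This is a minimal, hypothetical helper: the name `build_prompt`, its parameters, and the output layout are illustrative assumptions, not part of any BindWeave interface, and it only formats text.

```python
from typing import Sequence


def build_prompt(
    subject: str,
    action: str,
    camera_flow: Sequence[str] = (),
    wardrobe: str = "",
) -> str:
    """Compose a plain-text prompt from separate creative cues.

    Hypothetical helper for illustration only: it formats strings
    and does not call any BindWeave API.
    """
    parts = [f"{subject} {action}".strip()]
    if wardrobe:
        parts.append(f"wearing {wardrobe}")
    if camera_flow:
        # Join shots with an arrow, mirroring the "wide → mid → close-up" style.
        parts.append("camera: " + " → ".join(camera_flow))
    return ", ".join(parts)


prompt = build_prompt(
    subject="a barista",
    action="pours latte art",
    camera_flow=["wide", "mid", "close-up"],
    wardrobe="a green apron",
)
print(prompt)
```

Keeping subject, wardrobe, and camera cues as separate fields makes it easier to vary one of them between takes while the rest of the prompt stays the same.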

Reference-Aware Identity Lock

Upload one or more reference images and BindWeave keeps your characters visually consistent across every scene and take.

Designed for Creative Workflows

BindWeave outputs clips ready for import into non-linear editors (NLEs) or production pipelines, which suits ads, explainers, trailers, and social video content.

BindWeave AI Application Scenarios

Real-World Uses for BindWeave's Subject-Consistent Generation

Advertising & Social Spots

Keep the same brand ambassador across every version and regional edit with BindWeave's identity continuity.

Product Demos

BindWeave allows presenters to remain visually identical while changing backgrounds, props, or camera angles.

Education & Learning

Use BindWeave to maintain the same instructor avatar throughout multiple course modules for cohesive e-learning content.

Trailers & Teasers

Generate multi-character storylines that remain visually and contextually consistent across shot transitions.

Creator Shorts

For vloggers and creators, BindWeave ensures identity accuracy and smooth motion across every cut and scene.

Localization

Swap language tracks or subtitles while BindWeave keeps your on-screen talent consistent, ensuring localized videos stay on-brand.

Loved by Creators Worldwide

Real feedback from professionals using BindWeave

"BindWeave lets me maintain my characters' appearance and continuity across every shot. What used to take me days can now be done in hours."

"In our e-learning platform, BindWeave helps us keep the same instructor avatar through lessons, improving engagement and consistency."

"BindWeave makes localization effortless. We switch languages and still keep the same on-brand character across campaigns."

"With BindWeave, text and visual prompts align perfectly. The identity-locking system gives us reliable creative control during previsualization."

"BindWeave's MLLM-DiT framework is one of the cleanest implementations of subject-consistent generation — it's both academically solid and production-ready."

Alternatives to BindWeave

Explore popular AI video generation platforms and see how they compare to BindWeave in identity consistency, multi-subject reasoning, motion fidelity, and cross-modal prompt alignment.

| Platform | Strengths (Pros) | Limitations (Cons) | Best For |
|---|---|---|---|
| BindWeave | Multi-subject identity preservation, cross-modal integration, MLLM-DiT architecture, stable long-sequence motion | Newer ecosystem; requires well-structured prompts | Creators who need identity-consistent, multi-subject storytelling |
| Hailuo AI | Fast text-to-video and image-to-video generation, strong prompt adherence, beginner-friendly | Limited multi-subject consistency; short-sequence focus | Marketers and creators producing quick, affordable short-form videos |
| Seedance 1.0 Pro | Cinematic quality, multi-shot sequences, realistic motion and lighting | Requires high-end hardware; steeper learning curve | Filmmakers and studios seeking cinematic, high-fidelity output |
| Runway Gen-3 Alpha | Large ecosystem, templates, integrations, fast creative prototyping | Limited identity consistency; subscription cost | Teams producing branded content or social media campaigns |
| Kling AI | Strong motion physics, smooth transitions, 1080p realistic output | Limited English prompt support; multi-subject control varies | Creators prioritizing realistic motion and natural camera behavior |